Executive Summary

Artificial Intelligence is no longer limited to automating tasks or increasing productivity. It is reshaping how humans think. Through sustained interaction with AI systems, people are externalizing memory, compressing attention, accelerating decisions, narrowing language, and gradually delegating judgment. Over time, AI behaves less like a neutral tool and more like a cognitive environment—one that rewards speed, fluency, and closure while quietly discouraging effort, ambiguity, and deep reflection.

The greatest long-term risk of AI adoption is not job displacement or intelligence loss, but cognitive complacency: the gradual erosion of independent thinking, judgment, and intellectual ownership. This paper provides a people-first, research-grounded framework for understanding AI’s cognitive impacts and explains the conditions under which AI can amplify human cognition rather than replace it.

1. The Quiet Shift No One Prepared Us For

Most technological revolutions were visible.

- Factories reshaped cities.

- Computers reshaped offices.

- The internet reshaped communication.

Artificial Intelligence is different.

It reshapes thinking itself—quietly, efficiently, and often invisibly.

When people ask AI to explain a concept, summarize an argument, compare options, rewrite language, or suggest a decision, they are not merely outsourcing tasks. They are outsourcing cognitive effort. Over time, this alters how the mind approaches problems, tolerates uncertainty, forms beliefs, and assigns value to thinking itself.

Human cognition has always adapted to its environment.

AI has now become part of that environment.

This is not a philosophical concern. It is a practical one.

2. Defining AI Cognitive Impacts (Canonical Definition)

AI cognitive impacts are the short- and long-term changes in human cognition—including memory, attention, judgment, reasoning, creativity, and belief formation—that arise from repeated interaction with artificial intelligence systems.

This is fundamentally different from automation.

- Automation effects change what humans do

- Cognitive impacts change how humans think

Once thinking patterns adapt, reversal is slow. That is why AI’s cognitive effects matter more than most surface-level discussions acknowledge.

3. AI as a Cognitive Environment (Primary Model)

AI is often described as a tool.

That description is incomplete.

A more accurate framing is this:

AI functions as a cognitive environment.

A cognitive environment:

- rewards certain mental behaviors,

- discourages others,

- and reshapes default thinking patterns over time.

Search engines shaped what we looked for.

Social media shaped what we paid attention to.

AI shapes how we think while engaging with information.

Because the human brain optimizes for efficiency, it gradually adapts to whatever feels easiest. AI feels easy.

That is both its strength—and its risk.

4. The Core Cognitive Shifts (Primary Layer)

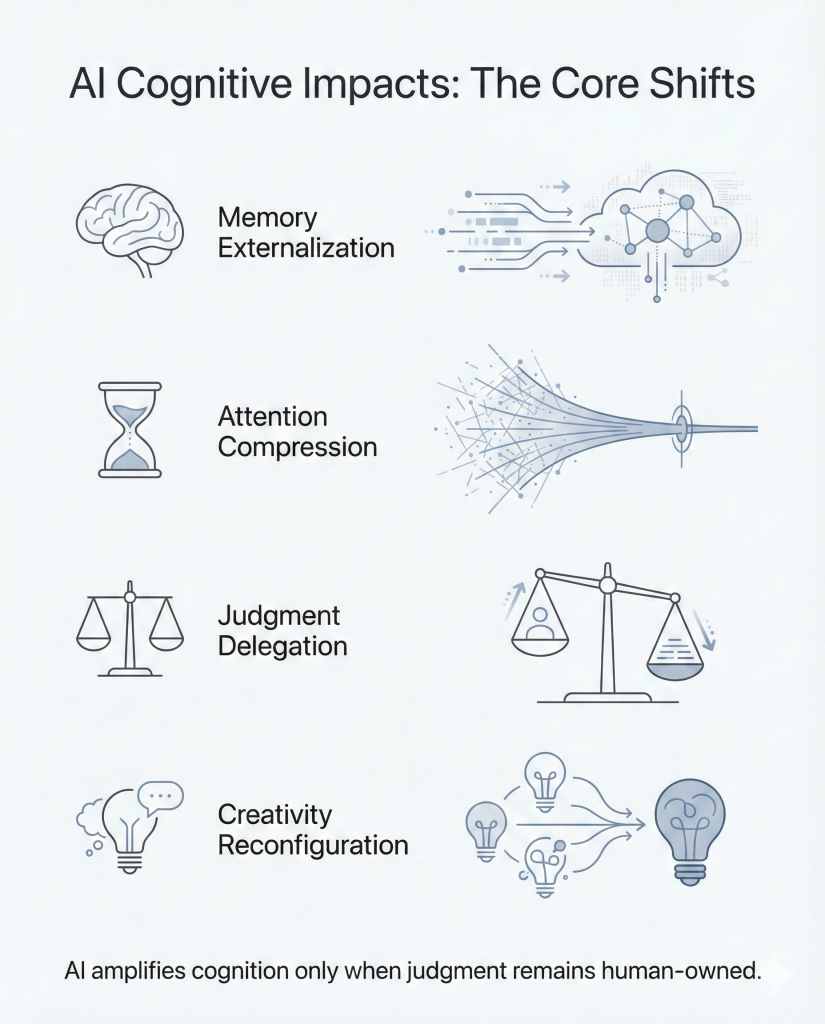

These are the foundational cognitive shifts caused by sustained AI interaction. Everything that follows either deepens or depends on these four.

4.1 Memory Externalization

Mechanism:

AI provides instant recall, explanations, summaries, and structured information on demand.

Cognitive Shift:

From internal memory formation → external memory retrieval

Humans increasingly remember where information exists rather than what the information means. Research on transactive memory shows that when people expect external access to information, they encode it less deeply and prioritize retrieval paths instead.

Long-Term Risk:

Loss of contextual depth.

Forgetting facts is not the danger.

Forgetting why facts matter is.

4.2 Attention Compression

Mechanism:

AI systems optimize for speed, clarity, and completion. Ambiguity feels inefficient. Exploration feels unnecessary.

Cognitive Shift:

From sustained focus → rapid resolution

Research on collective attention dynamics shows evidence of accelerating attention cycles and narrowing focus in information-dense environments.

Long-Term Risk:

Reduced tolerance for unresolved problems.

What becomes optional eventually disappears.

4.3 Judgment Delegation

Mechanism:

AI outputs are fluent, confident, and plausible. Humans are predisposed to read confident language as a signal of reliability.

Cognitive Shift:

From critical evaluation → surface validation

Stanford research on AI overreliance shows that users defer judgment even when warned, especially when explanations are present.

Long-Term Risk:

Judgment atrophy.

AI does not eliminate bias.

It averages it.

4.4 Creativity Reconfiguration

Mechanism:

AI excels at combinatorial generation—mixing patterns, styles, and ideas at scale.

Cognitive Shift:

From originating ideas → selecting ideas

Long-Term Risk:

Homogenization.

When many creators rely on the same generative systems, outputs converge.

AI multiplies possibilities.

Humans assign meaning.

5. Epistemic Drift: How Truth Becomes Style-Dependent

Epistemic drift occurs when belief formation shifts away from evidence and toward presentation quality.

AI accelerates this shift.

Why This Happens

Humans use cognitive heuristics:

- fluency = competence

- confidence = correctness

- coherence = truth

AI systems are optimized for all three.

As a result, belief formation subtly changes:

Traditional belief formation

Claim → Evidence → Evaluation → Acceptance

AI-mediated belief formation

Claim → Explanation → Acceptance

Evidence becomes implicit. Evaluation becomes optional.

This is not misinformation.

It is misplaced trust.

Stanford governance research emphasizes that where judgment is required, humans must remain accountable—not merely “in the loop”.

6. Decision Velocity vs Decision Quality

AI dramatically increases decision speed.

This is beneficial for:

- drafting

- comparison

- operational decisions

But dangerous for:

- strategy

- ethics

- long-term trade-offs

Speed creates confidence.

Reflection creates wisdom.

When velocity outpaces judgment, mistakes are made faster and with greater confidence.

7. Cognitive Effort, Learning, and Retention (Primary Layer)

Modern learning science makes one fact unavoidable: effort is not optional for durable thinking.

Human memory depends on effortful encoding and retrieval. When effort is removed, retention weakens—even if understanding feels smooth.

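Research on desirable difficulties shows that the conditions that make learning feel harder are often the ones that make it more durable. Robert and Elizabeth Bjork's distinction between storage strength and retrieval strength explains why ease is misleading: fluent, easy access reflects momentary retrieval strength, not the lasting storage strength that effortful practice builds.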

AI removes effort by default.

That accelerates output—but weakens learning.

8. The Dopamine–Ease Loop

AI delivers:

- quick answers

- novelty

- resolution

This can engage dopamine-linked reward cycles similar to those of social media, though more subtly.

Over time, the brain associates:

- thinking deeply = discomfort

- asking AI = relief

Humans do not lose intelligence.

They lose willingness to struggle.

9. Cognitive Debt (Original Framework)

Cognitive debt accumulates when repeated mental offloading reduces a person’s capacity for independent reasoning.

Like technical debt:

- it feels efficient short-term

- it becomes costly long-term

Symptoms include:

- difficulty forming original positions

- dependence on external validation

- shallow synthesis

Cognitive debt compounds quietly and reveals itself only under complexity.

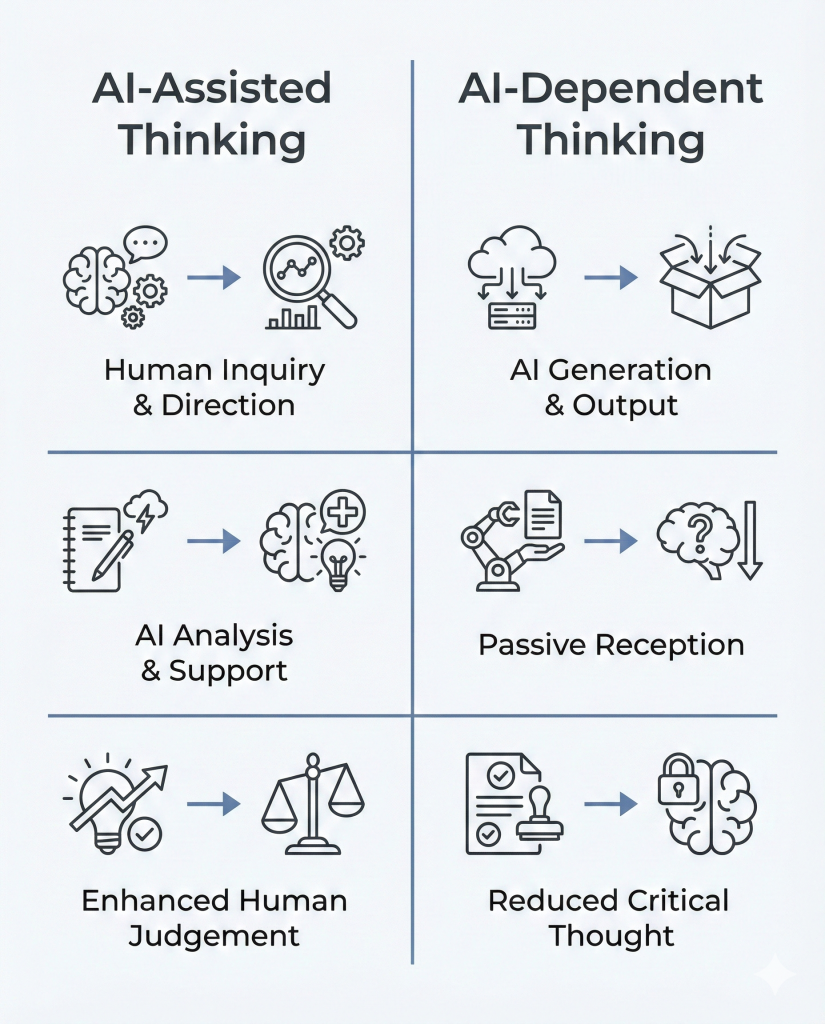

10. AI-Assisted vs AI-Dependent Thinking

| Dimension | AI-Assisted Thinking | AI-Dependent Thinking |
|---|---|---|
| Role of AI | Amplifier | Substitute |
| Thinking order | Think → Prompt | Prompt → Accept |
| Judgment | Central | Optional |
| Long-term outcome | Growth | Atrophy |

This distinction predicts who gains advantage in the AI era.

11. Cognitive Skill Stratification in the AI Era

AI does not flatten skill gaps.

It widens them.

Strong thinkers use AI to accelerate insight.

People with weak thinking habits use AI to avoid effort.

Over time, this produces a real divide:

- people who can reason under uncertainty

- people who can only select among generated options

This is not about IQ.

It is about cognitive posture.

12. Long-Horizon Consequences (10–15 Year View)

Short-term AI benefits are easy to measure. Long-term cognitive consequences are not.

Over a 10–15 year horizon, sustained AI-mediated thinking is likely to produce several systemic effects:

- Knowledge breadth increases, but conceptual depth declines

- Decision velocity rises, while judgment quality plateaus

- Confidence increases, even when understanding is shallow

- Original synthesis becomes rarer outside elite cognitive environments

This does not indicate declining intelligence. It indicates declining cognitive training.

Human cognition improves through repeated exposure to complexity, ambiguity, and effort. When those conditions disappear, capacity erodes—even if output volume increases.

The greatest risk is not AI dominance.

It is human cognitive under-conditioning.

13. Education’s Blind Spot

Most education systems are responding to AI by teaching tool usage.

Very few teach:

- how to verify AI outputs,

- how to think before prompting,

- how to tolerate cognitive struggle.

Learning science consistently shows that effortful retrieval improves durable learning—even when learners feel less confident during the process.

When friction is removed from learning, speed increases—but understanding weakens.

Education that optimizes for convenience risks producing students who are fluent, articulate, and ungrounded.

14. Moral Outsourcing and Ethical Drift

Judgment is not purely cognitive.

It is ethical.

When AI systems are used to:

- justify decisions,

- frame trade-offs,

- validate outcomes,

humans risk moral outsourcing.

Responsibility becomes ambiguous:

“The system suggested it.”

Legal and governance research emphasizes that algorithmic systems must not replace human moral accountability.

Without clear ownership, ethical reasoning weakens alongside cognitive effort.

15. Depth Layer I: The Neuroscience of Effort and Memory

Human memory operates through multiple systems, most notably working memory and long-term memory. For information to transfer between them, the brain requires effortful encoding and retrieval.

AI reduces:

- retrieval effort,

- synthesis effort,

- explanatory effort.

As a result, information feels understood but remains fragile.

Research on transactive memory demonstrates that when people expect external access to information, they encode less deeply and remember locations rather than meaning.

The brain tends to treat effortless information as disposable.

This does not make AI harmful by default. It means that AI use tends to leave understanding shallow unless effort is deliberately reintroduced.

16. Depth Layer II: Epistemic Drift — Mechanistic Breakdown

Epistemic drift is mechanical, not philosophical.

Humans rely on heuristics:

- fluency = competence

- confidence = correctness

AI is optimized for both.

Research from Stanford shows that users over-rely on AI outputs even when warned, particularly when explanations are present.

Belief formation quietly shifts from:

“Is this true?”

to

“Does this sound right?”

This erodes epistemic discipline even when the system itself is accurate.

17. Depth Layer III: Language Compression and Thought Narrowing

Language shapes thought.

AI language is optimized for:

- clarity,

- closure,

- compression.

Human thought, by contrast, thrives on:

- ambiguity,

- partial ideas,

- unresolved tension.

Cognitive linguistics research shows that language structure influences conceptual framing.

When language becomes too clean, thought becomes narrow.

Uncertainty is not confusion.

It is the incubation phase of insight.

18. Boundary Conditions: When AI Improves vs Weakens Cognition

AI improves cognition when:

- Humans think before prompting

- AI is used for range, not conclusions

- Judgment remains human-owned

- Effort is preserved

AI weakens cognition when:

- It becomes the first step in thinking

- Outputs are accepted by default

- Verification is skipped

- Closure is prioritized over understanding

The distinction is behavioral, not technological.

19. Practical Protocol: Using AI Without Losing Cognitive Strength

These are cognitive safeguards—not productivity hacks.

- Think before you prompt

- Force disconfirmation (“What would disprove this?”)

- Separate ideation from decision

- Preserve your voice

- Protect long-form thinking time

AI should be a sparring partner, not a crutch.

20. FAQs

What are AI cognitive impacts?

AI cognitive impacts are the changes in human memory, attention, judgment, reasoning, and creativity that result from repeated interaction with AI systems. These impacts affect how people think, not just what tasks they perform.

Does AI make people less intelligent?

AI does not inherently reduce intelligence, but it can weaken independent thinking if users outsource judgment and effort.

What is cognitive debt?

Cognitive debt is the long-term cost of repeated mental offloading to AI, leading to reduced independent reasoning and shallow synthesis.

How can humans use AI without losing thinking skills?

By thinking before prompting, verifying outputs, preserving effort, and retaining accountability for decisions.

21. Expanded FAQ

Will AI replace human judgment?

No. Judgment under uncertainty remains a human responsibility.

Why do AI answers feel so trustworthy?

Because fluency and confidence trigger cognitive heuristics associated with correctness.

Can AI improve learning?

Yes, when it supports effortful thinking rather than replacing it.

22. Canonical Conclusion

Artificial Intelligence reshapes human cognition by externalizing memory, compressing attention, accelerating decisions, narrowing language, and redistributing judgment. The primary long-term risk is not automation or intelligence loss, but cognitive complacency—the gradual abandonment of effortful thinking. Human advantage in the AI era belongs to those who retain judgment, tolerate uncertainty, and use AI as a cognitive amplifier rather than a substitute.